After having the mount for 3 years, I finally got my monitors mounted on my desk. I have so much more usable desk space now.

Next step is to replace the old 23'' Asus I have as my secondary with another 27'' monitor to match my PG27UQ. Almost did that today, but now that they're mounted, yeah, it's gonna bug me until I address it.

By Veckrot: "GeForce cards now need their own nuclear power plant."

By Laboured: "Oh it's on now"

You reckon it'll run Fortnite at 250fps?

By Laboured: "Oh it's on now"

The 3080 having 10gb is really disappointing if true. If you're upgrading from a 1080 Ti or 2080 Ti, the 3090 is the only way to not lose VRAM. An 11gb RTX 3080 could have been a solid upgrade for 1080 Ti owners that didn't want to go for the 3090.

By Celcius: "The 3080 having 10gb is really disappointing if true. If you're upgrading from a 1080 Ti or 2080 Ti, the 3090 is the only way to not lose vram. An 11gb RTX 3080 could have been a solid upgrade for 1080 Ti owners that didn't want to go for the 3090."

Fully agree on that. Even if it outperforms the 2080 Ti overall, it would feel wrong to take a downgrade in total VRAM.

If the pricing rumours are true, I’m wondering if we’ll see a cut-down 3090 with 11-12GB to fill the gap in the market between the 3090 and 3080.

3080 Super in 6-12 months? 3085? 3080Ti? Who knows.

Yeah, I think we'll see a 3080 Ti in the upcoming months. Or a Super variant. There's too much of a price gap between the 3090 and 3080.

Got the bookshelves for the counter top to go on.

Girlfriend's office chair arrived on time.

The countertop never arrived yesterday when it was supposed to; they returned it to Wickes, and it's taken 24 hours to get a reply from them to find out what the fuck they're playing at.

Fewmin'.

Back on team wireless. Picked up the Logitech G915 TKL KB today. I've been using it throughout the day, and the GL switches definitely feel different from Cherry's. These are "GL-Clicky" switches, which I automatically assumed would be the Cherry Blue equivalent, but they are much quieter than those.

Really, you're paying for the aesthetics with this keyboard. It does look really good in person. The slim profile with the brushed aluminum finish and Logitech's RGB, which I've always preferred over other manufacturers', definitely gives it a premium look. Supposedly it has great battery life too. It is $225, though. It should.

Also picked up the wireless G502. Nothing different from the standard one outside of Powerplay support, which is great. I have the OG 502, but its cable got fucked up a while ago. It was always one of my favorites.

With my monitors mounted, I only have one cable on my desk, and that's from the Powerplay pad.

By inky: "Micro B USB charging port tho. Incredible for such a premium product."

I hear that. It's overpriced, but pretty sweet.

I've been wanting the KB since it was announced. Used the excuse of having newfound desk space, and wanting to eliminate the remaining wires, to get it lol.

Supposedly it holds a charge for 40+ hours at full brightness with RGB enabled. I've got the USB charge cable hooked to my monitor and tucked away on the mounting bracket, out of sight.

@Zabo (or anyone else, feel free to chime in)

I think I need a different desk mount. Mine is relatively cheap, and I have the main monitor on the right and a smaller one on the left. The problem is that I have to turn my head slightly to view the one on the right, and I can see this causing strain over time.

Ideally I think I need a mount that would allow one arm to move such that the 27'' monitor would be directly in front of me, and the secondary monitor off to the side, in a portrait format.

Something like this https://www.amazon.com/AmazonBasics-Premium-Single-Monitor-Stand/dp/B00MIBN16O/

Pretty decent amount of $$. Would something like this work, or am I looking at a situation where two single-arm mounts would be better?

Don't @ me, I've ascended to wall mounting.

I went with two single arms, primarily because the secondary drop-down monitor was a later purchase, but also because it allowed me total freedom with positioning. Can vouch for the AmazonBasics one; I have the wall-mountable version, two of them, and they've been super solid.

Wait, so will you or will you not be moving your monitors around?

By Zabojnik: "Wait, so will you or will you not be moving your monitors around?"

This is what I want: to be able to move them around as I see fit. The mount I'm using now is OK, but I can't stack the monitors, for example, or put them in the configuration I described above.

I think getting two separate arms is a better idea than a double-armed mount, tbh. Lets you do much more.

Keep in mind the height of your monitors if you want to stack them. You will need a tall mount for that.

can't believe i'm about to drop something like 700 trumps on a graphics card

first time for everything

Today Palit has registered 171 products with Eurasian Economic Commission. Such a number of codes does not mean Palit will ever launch this many products. It is simply a preliminary submission to EEC, as we have seen many times before. Some of those products make it to the market, others do not. It is worth noting though, that some of those codenames could simply refer to specific regions, with the only difference being the packaging design.

https://videocardz.com/newz/palit-submits-geforce-rtx-3090-rtx-3080-rtx-3070-and-rtx-3060-to-eec

Guessing AIB cards are gonna launch pretty much alongside the FE cards.

By Kibner: "I think getting two separate arms is a better idea than a double armed mount, tbh. Lets you do much more."

This. Check the range of motion of the arm(s) you'll end up buying and apply it to the monitor config you want. Don't just assume that having two fully articulated arms will allow you to position the monitors exactly how you want them. Do the math. The |- config shouldn't be a problem, but stacking monitors might be because of the height difference.

I might just stick with my 2080 Ti for this year. Aside from it getting hotter when overclocked (and needing higher fan speeds -> higher noise :( ), it's still doing all right, considering I'm only playing at 1440p.

i hear that dr blade. especially since you're still stuck at a plebeian console resolution like 1440p.

also, wireless g502 is so gud

By HonestVapes: "I'm also skipping the 3000 series, I'm happy to lower some settings here and there for 4K/60."

same mashallah.

Except at "pleb" 1440p lol

By HonestVapes: "I'm also skipping the 3000 series, I'm happy to lower some settings here and there for 4K/60."

Pretty sound decision. For all the hate it gets (mostly by people who never owned the card), the 2080ti has provided a pretty good 4k60 experience for the past 2 years.

--

https://videocardz.com/newz/nvidia-geforce-rtx-3090-and-geforce-rtx-3080-specifications-leaked

According to our sources, NVIDIA is set to launch three SKUs in September: RTX 3090 24GB, RTX 3080 10GB and RTX 3070 8GB. We have learned that board partners are also preparing a second variant of the RTX 3080 featuring twice the memory (20GB). However, that model is yet ‘TBA’ and it might launch later. This post is only about RTX 3090 and RTX 3080.

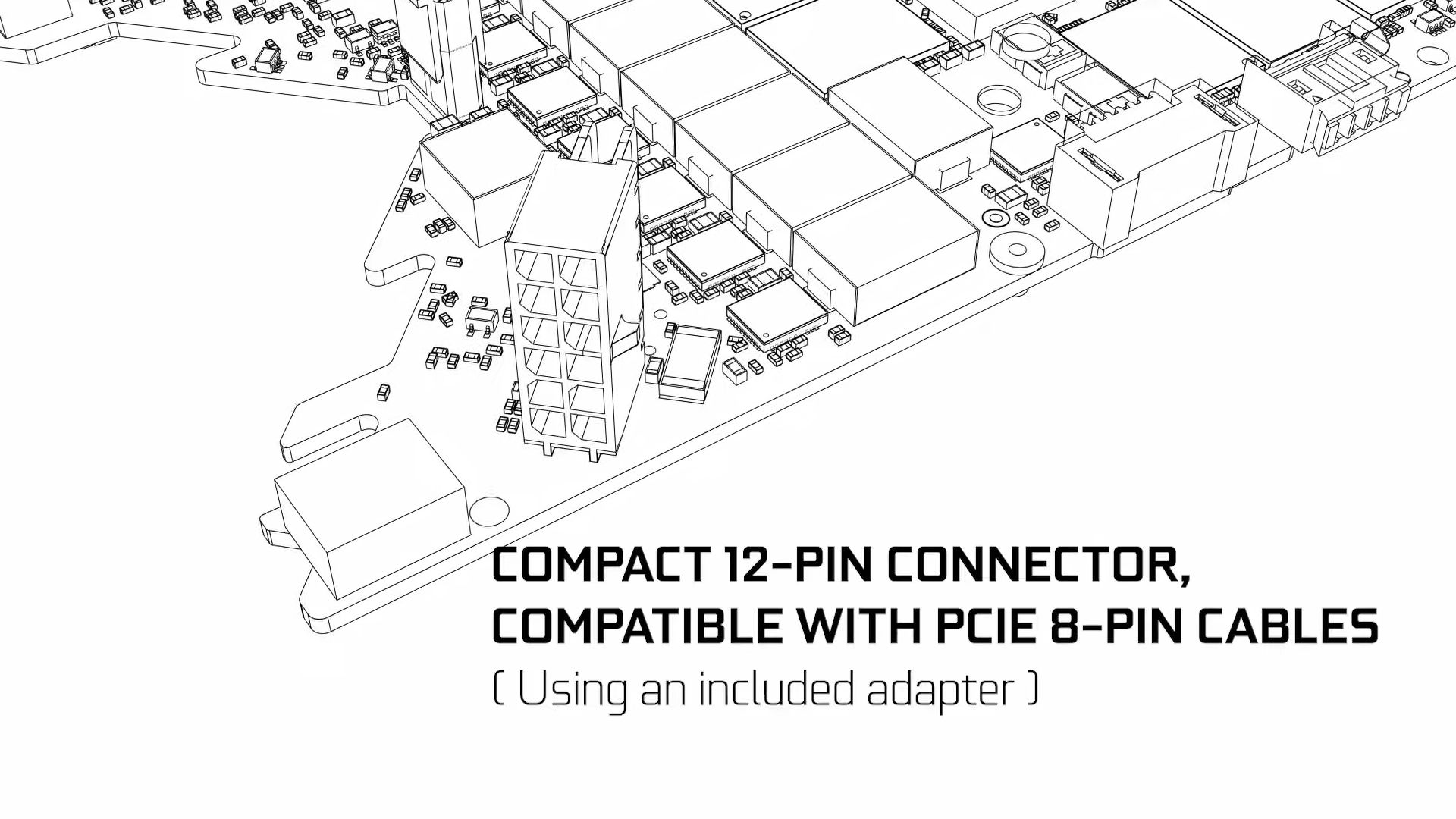

With GeForce RTX Ampere, NVIDIA introduces 2nd Generation Ray Tracing Cores and 3rd Generation Tensor Cores. The new cards will also support PCI Express 4.0. Additionally, Ampere supports HDMI 2.1 and DisplayPort 1.4a display connectors.

NVIDIA GeForce RTX 3090 features GA102-300 GPU with 5248 cores and 24GB of GDDR6X memory across a 384-bit bus. This gives a maximum theoretical bandwidth of 936 GB/s. Custom boards are powered by dual 8-pin power connectors which are definitely required since the card has a TGP of 350W.

The GeForce RTX 3080 gets 4352 CUDA cores and 10GB of GDDR6X memory. This card will have a maximum bandwidth of 760 GB/s thanks to the 320-bit memory bus and 19 Gbps memory modules. This SKU has a TGP of 320W and the custom models that we saw also require dual 8-pin connectors.
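For anyone sanity-checking the leaked bandwidth figures: theoretical peak bandwidth is just the bus width in bytes (bits / 8) times the per-pin data rate. A quick sketch below; the function name is made up for illustration, and the 19.5 Gbps figure for the 3090 is not in the leak, it's back-calculated from the quoted 936 GB/s over a 384-bit bus.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (hypothetical helper).

    Every pin on the bus moves data_rate_gbps gigabits per second,
    so total bits/s = bus_width_bits * data_rate_gbps; divide by 8 for bytes.
    """
    return bus_width_bits / 8 * data_rate_gbps


# RTX 3080 per the leak: 320-bit bus, 19 Gbps GDDR6X
print(peak_bandwidth_gbs(320, 19))    # 760.0

# RTX 3090: 384-bit bus; 19.5 Gbps is an inferred assumption, not a leaked spec
print(peak_bandwidth_gbs(384, 19.5))  # 936.0
```

Both results line up with the numbers quoted in the article, which at least suggests the leaked specs are internally consistent.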

The GeForce RTX 3070 also launches at the end of next month (unless there is a change). We can confirm it has 8GB of GDDR6 memory (non-X). The memory speed is estimated at 16 Gbps and TGP at 220W. The CUDA Core specs are still to be confirmed.

We knew the 3090 would be power hungry, but even the 3080 has a TGP of 320W.

If the uptake of DLSS 2.0 becomes really consistent going forward, then yeah, 2080 Tis are going to last you.

good to see more firm rumours of the 20gb 3080 variant, think I'll wait for that one. imagine getting scammed into 10gb in 2020/21

Interested to see if they've made the tensor cores more performant. I want increasingly moar RT in my life after trying Control.

By Smokey: "Pretty sound decision. For all the hate it gets (mostly by people who never owned the card), the 2080ti has provided a pretty good 4k60 experience for the past 2 years."

It's been solid for me. The majority of games I've played either run maxed out at 60-80FPS at 4K, or around 45-50FPS and need a few settings turned down to hit 60. Going forwards, I'm happy to drop down to 1800p if I can't get a consistent FPS via setting tweaks. Though I'm hopeful that DLSS and other rendering techniques like it will really start to go mainstream in the foreseeable future.

Now the 4080Ti, I'll be all over that.

By HonestVapes: "Excited to see EVGA show off their 5 slot FTW3 variants."

At a mere 350mm length.

By Smokey: "3090 is only for bois with chonky cases rip vapes"

Every card is a mere single slot with a waterblock on, my friend.

I'm concerned the 3070 will only have 8 GB of ram. I sincerely hope that is enough for 1440p for multiple years.

So my current GPU uses 320W at peak and 3090 uses 350W allegedly. Just hoping my 650W PSU will hold out.

By Kibner: "I'm concerned the 3070 will only have 8 GB of ram. I sincerely hope that is enough for 1440p for multiple years."

eeeeeeeeeeeeeeeeeeeeeeeeeeeeeehhhhhhhh idk mane

rtx 3070 looking a bit funny in the light, tbh. Seems to be set up perfectly for a Super or Ti variant.

By Kibner: "I'm concerned the 3070 will only have 8 GB of ram. I sincerely hope that is enough for 1440p for multiple years."

If one of the rumours is true, there may be double-capacity variants of the 3070/3080 and a half-capacity variant of the 3090.

It's not unheard of, I suppose, but if the cost of the memory puts a potential 16GB 3070 over the price of a 10GB 3080, then shit's just not going to sit well on the shop's shelf.

Not long until it's all in the open at least.